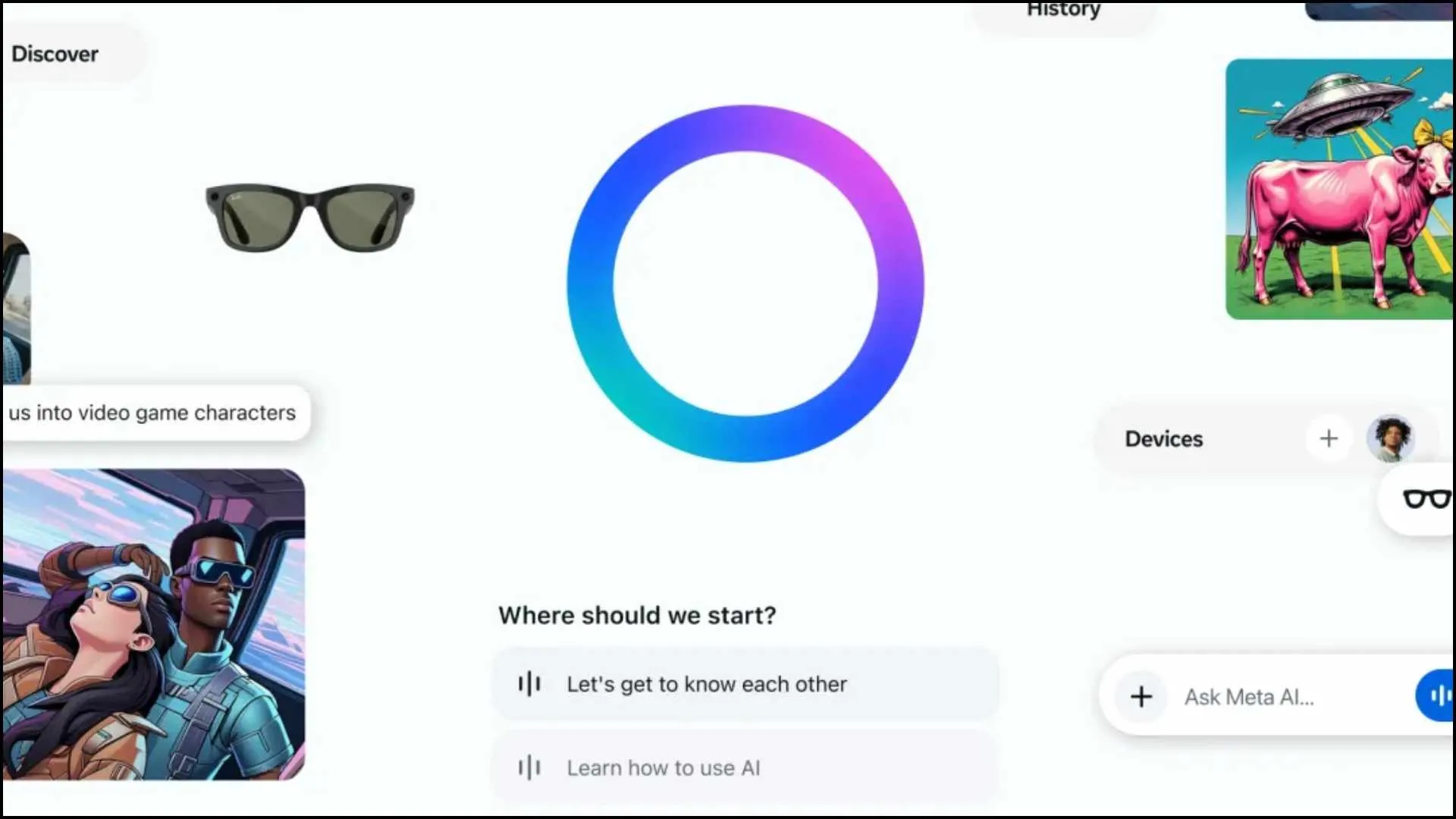

Meta has launched a standalone AI app that rivals ChatGPT, complete with voice interaction, real-time web results, image generation, and a social feed called Discover. The upgraded assistant is powered by Meta's own Llama 4 model. It is designed not just to answer questions but also to integrate deeply with Facebook, Instagram, and smart hardware such as Meta's Ray-Ban smart glasses.

The app isn't entirely new: it replaces the older Meta View companion app for the Ray-Ban smart glasses, keeping that app's features and adding the new AI tools on top. According to Connor Hayes, Meta's VP of Product, the move is part of a broader roadmap that develops AI software and hardware together.

A New Social Feed to Share AI Prompts

One of the standout features of Meta AI is its Discover feed. It shows AI interactions that other people, whether friends or public users, have chosen to share, prompt by prompt. Users can like, comment on, share, or remix these interactions. The feed gives the assistant a social-media-style layer, helping people "see what they can do with it," as Hayes explains.

This social approach to AI isn't completely unexpected: Elon Musk's X platform has integrated the AI chatbot Grok, and OpenAI plans to add social features to ChatGPT. But Meta is the first to ship such features in a full-fledged AI assistant app.

Voice Mode That Talks Like a Human

Meta AI also features an upgraded voice mode. The app introduces a beta full-duplex voice model, which makes conversations more natural and responsive. It supports dynamic turn-taking, overlapping speech, and backchanneling (short listener cues such as "mm-hmm"), meaning the assistant can talk and listen at the same time, much as people do in real conversation.

During testing, the full-duplex version felt more lively and personal compared to the standard voice mode. Both versions are currently available in the U.S., Canada, Australia, and New Zealand.

Real-Time Results and Personalization

The assistant can fetch real-time web results, just like ChatGPT with browsing enabled. In the U.S. and Canada, it can even use data from your Instagram and Facebook profiles to tailor responses. Users can also instruct the AI to remember personal preferences, making future interactions smoother and more relevant.

AI Meets Smart Glasses

Meta has also built its AI assistant into its Ray-Ban smart glasses, which can recognize what you're looking at and even translate languages in real time. The app brings the assistant and the glasses' companion features together, with a dedicated tab for viewing captured photos, videos, and more.

The company plans to release a new version of the smart glasses later this year with a built-in heads-up display, extending the assistant's integration with Meta's hardware.

Meta’s Bigger Vision for AI

Hayes acknowledges that most of Meta AI's current use comes from Instagram's search bar, but says a standalone app offers a more intuitive experience. The assistant has already reached "almost one billion users," a figure that underlines the reach Meta brings to the AI race.

With features combining social interaction, voice AI, real-time browsing, and smart devices, Meta is clearly positioning itself as a top competitor in the AI space, going head-to-head with OpenAI’s ChatGPT and others.
