Google DeepMind has unveiled two new artificial intelligence (AI) models in its Gemini Robotics family, designed to make robots smarter and more capable in the real world. Named Gemini Robotics-ER 1.5 and Gemini Robotics 1.5, the models work together to improve a robot’s reasoning, vision, and action.

Unlike earlier AI systems that combined planning and execution in a single model, DeepMind’s new approach splits the two. Gemini Robotics-ER 1.5 acts as the planner, or orchestrator, while Gemini Robotics 1.5 carries out the planned steps from natural-language instructions. The split is intended to reduce the errors and delays that often arise when one model handles both planning and acting.

We’re making robots more capable than ever in the physical world.

Gemini Robotics 1.5 is a levelled up agentic system that can reason better, plan ahead, use digital tools such as @Google Search, interact with humans and much more. Here’s how it works pic.twitter.com/JM753eHBzk

— Google DeepMind (@GoogleDeepMind) September 25, 2025

Gemini Robotics-ER 1.5 is a vision-language model (VLM) that can analyse a situation, create multi-step plans, and integrate external tools such as Google Search to make informed decisions. It excels in spatial understanding, allowing robots to navigate and manipulate their surroundings more effectively.

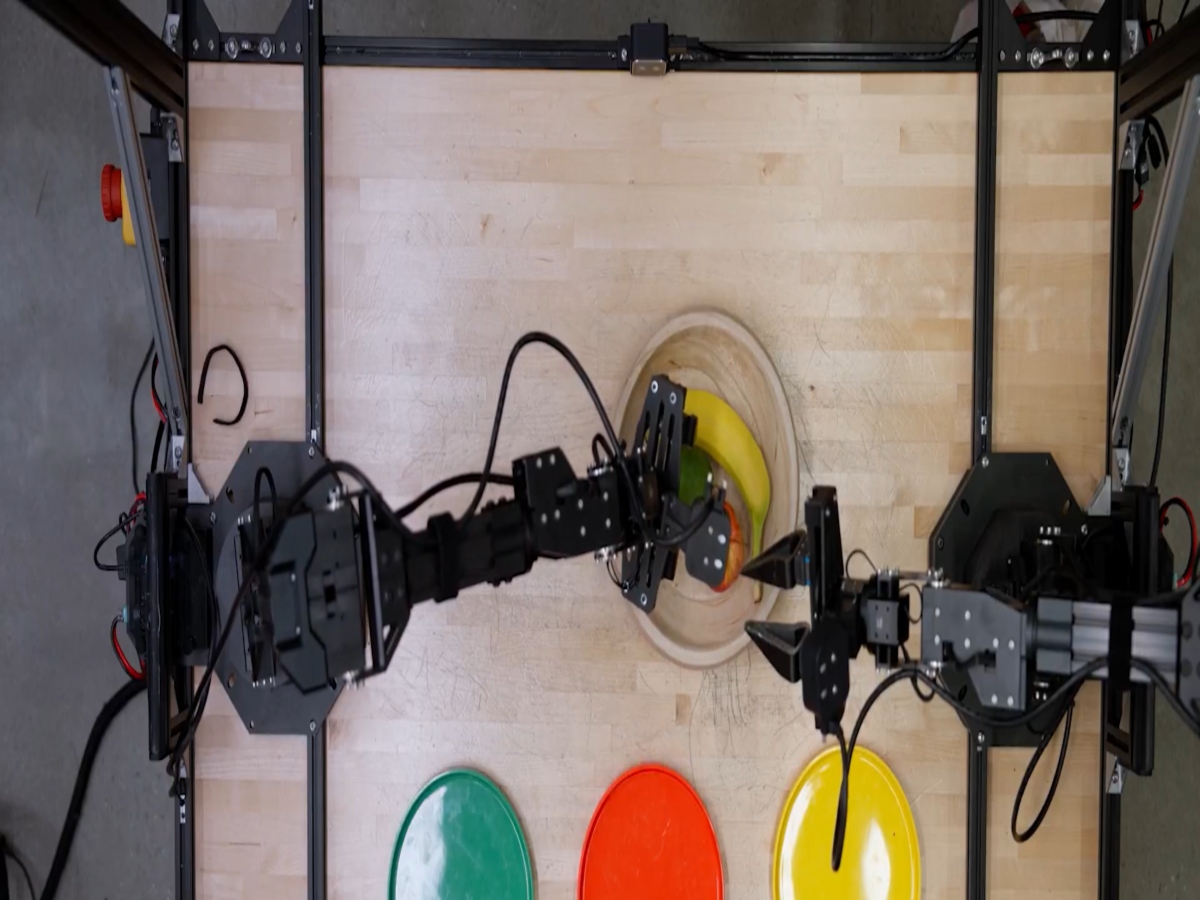

Once a plan is ready, Gemini Robotics 1.5, a vision-language-action (VLA) model, translates the instructions and visual input into motor commands. This lets robots complete tasks efficiently while explaining their actions in natural language. Together, the two models allow robots to work through complex tasks step by step. For instance, a robot could look up local recycling rules online and then sort items into compost, recycling, and trash bins accordingly.
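To make the division of labour concrete, the planner-and-executor split can be sketched in a few lines of Python. This is only an illustrative toy, not DeepMind's implementation or API: the names `Step`, `lookup_rule`, `plan`, `execute`, and `run` are hypothetical, the `LOCAL_RULES` table stands in for an online search, and the "motor commands" are plain strings.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for recycling rules the planner would look up online.
LOCAL_RULES = {
    "banana peel": "compost",
    "glass bottle": "recycling",
    "chip bag": "trash",
}


@dataclass
class Step:
    """One planned action: which item goes into which bin."""
    item: str
    target_bin: str


def lookup_rule(item: str) -> str:
    """Assumed tool call (a stand-in for a web search); unknown items go to trash."""
    return LOCAL_RULES.get(item, "trash")


def plan(items: list[str], tool: Callable[[str], str] = lookup_rule) -> list[Step]:
    """Planner role: break the goal into ordered steps, consulting an external tool."""
    return [Step(item, tool(item)) for item in items]


def execute(step: Step) -> str:
    """Executor role: a real VLA model would emit motor commands;
    this sketch only returns a text description of the action."""
    return f"pick up {step.item}; place in {step.target_bin} bin"


def run(items: list[str]) -> list[str]:
    """Plan first, then execute each step in order."""
    return [execute(step) for step in plan(items)]


if __name__ == "__main__":
    for action in run(["banana peel", "glass bottle", "chip bag"]):
        print(action)
```

Keeping `plan` and `execute` as separate functions mirrors the idea described above: the planner can be changed or given new tools without touching the part that acts.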

DeepMind says the system is adaptable to robots of various shapes and sizes thanks to its spatial awareness and flexible design. Currently, developers can access the planner model, Gemini Robotics-ER 1.5, through the Gemini API in Google AI Studio. The task-executing model, Gemini Robotics 1.5, is available only to select partners.

Shivam Verma is a journalist with over three years of experience in digital newsrooms. He currently works at NewsX, having previously worked for Firstpost and DNA India. A postgraduate diploma holder in Integrated Journalism from the Asian College of Journalism, Chennai, Shivam focuses on international affairs, diplomacy, defence, and politics. Beyond the newsroom, he is passionate about football—both playing and watching—and enjoys travelling to explore new places and cuisines.