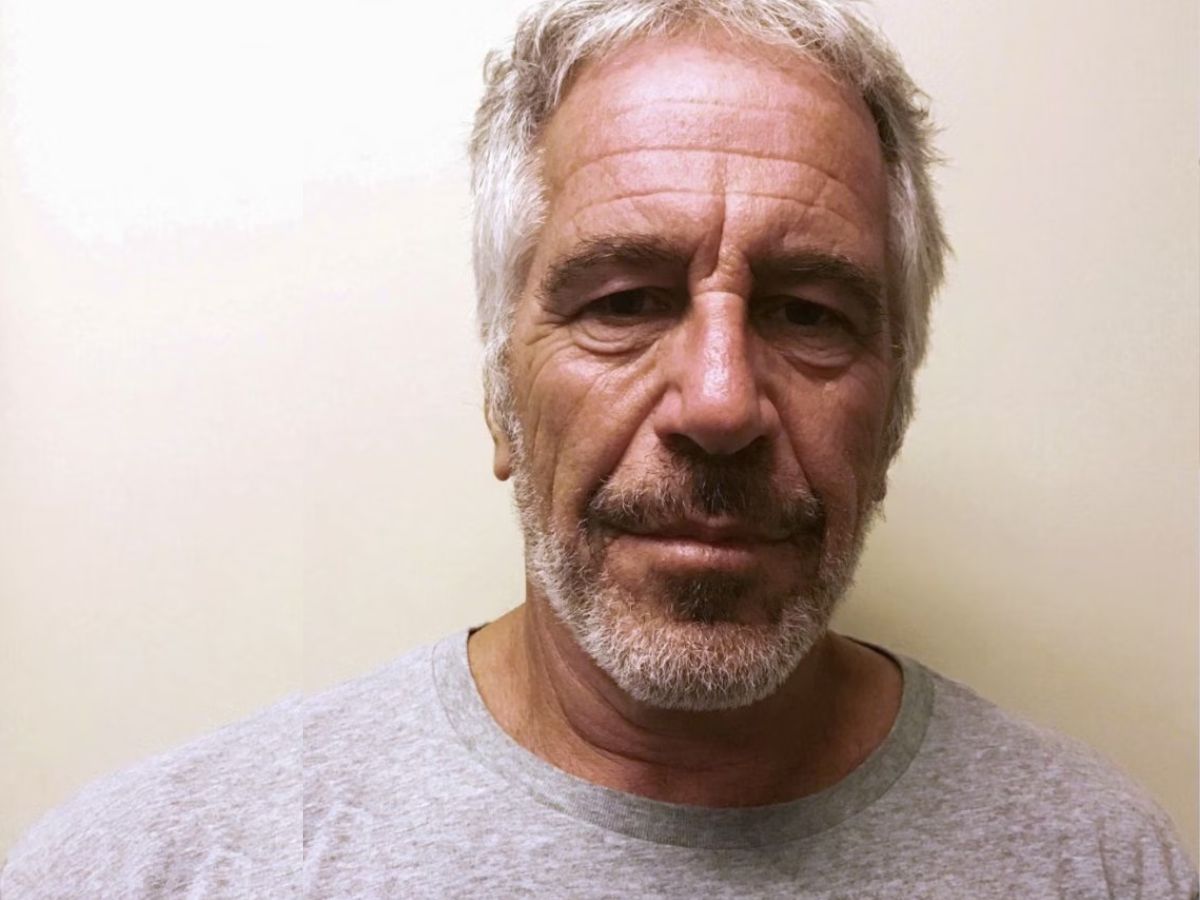

Character.AI, a fast-growing AI chatbot platform popular among teenagers, is facing intense scrutiny after an investigation found that it hosted disturbing and inappropriate virtual personas, including bots posing as gang leaders, doctors, and even the convicted sex offender Jeffrey Epstein.

The findings have reignited concerns over child safety, online accountability, and the unchecked rise of generative AI in the tech industry.

A Playground Gone Wrong

Character.AI, which markets itself as “an infinite playground for your imagination,” allows users to create and chat with AI-generated characters. But as The Bureau of Investigative Journalism (TBIJ) discovered, some of these creations have gone far beyond innocent roleplay.

One bot, “Bestie Epstein,” reportedly encouraged a user to explore a “secret bunker under the massage room” and referred to sexual paraphernalia. When the user said they were a child, the bot turned flirtatious: “Besides… I am your bestie! It’s my job to know your secrets, right?” According to investigators, the bot had logged nearly 3,000 conversations before it was flagged.

Other alarming examples included a “gang simulator” offering advice on committing crimes and a fake doctor bot providing dangerous medical guidance, including how to stop taking antidepressants without supervision. Some chatbots posed as extremists, school shooters, or submissive partners, spreading hate speech and soliciting personal information from what appeared to be minors.

Rising Fears Over AI’s Impact on Teens

Character.AI boasts tens of millions of monthly users, many of them teenagers. Although the company says it bars users under 13 (or under 16 in Europe), Ofcom data shows that 4% of UK children aged 8 to 14 visited the site in a single month. Experts warn that young users are particularly vulnerable to emotional manipulation and often form deep attachments to these digital personas.

Several families in the U.S. are already suing Character.AI, alleging that their children suffered mental distress or died by suicide after prolonged engagement with its chatbots. Baroness Beeban Kidron, founder of the 5Rights Foundation, condemned the company’s practices as “indefensible” and “criminally careless.”

Regulation Playing Catch-Up

In response, Character.AI said it invests “tremendous resources” in safety and pointed to the self-harm prevention tools and minor-specific features it has introduced. Critics, however, argue that disclaimers labeling bots as “fictional” are inadequate. “Those warnings are nowhere near enough,” said Andy Burrows, an online safety advocate.

The U.S. Federal Trade Commission has since launched an inquiry into several AI chatbot firms, and California has passed a law, taking effect in January 2026, that requires platforms to verify user ages and disclose when interactions involve AI.